Executive Summary

This survey of software practitioners identifies some key trends in the quality engineering toolchain:

- Git is the most popular version control system and is likely to increase further in popularity.

- The Atlassian ecosystem is the most popular toolset within the issue management, test management and service management categories.

- Jenkins is the most popular continuous integration tool.

- SonarQube is the most popular static code analysis tool.

- The Microsoft stack (Team Foundation Server / Azure DevOps) has limited popularity; its strongest showing is as a test management tool.

We found that about 50% of respondents pull this data into Microsoft Office products to put together reports and analytics. Finally, we introduce Neuro, a tool that integrates with the popular systems in the toolchain and automates reporting and analytics, reducing the cost and effort involved in quality monitoring and process improvement.

Introduction

Sometimes it seems like the tools we use for software quality and test engineering are continuously changing. People are always chasing the next best thing: tools that will make them more productive and efficient. Two decades ago the tools we used were clunky Windows client applications, with very limited integration with other tools. This led to a surge in multi-use tools that locked us into specific vendor ecosystems for managing all of our requirements, tests and issues. Looking at the market in 2021, things are very different: we have web-based, responsive and usable tools. Not only that, but everything is API-enabled, allowing us to spin up our own integrations.

At least, that’s what we thought. But we wanted to know what everyone else is doing, so we surveyed 75 people from different organisations about their toolchain. This report presents the results.

The respondents were from a variety of levels in their organisations: 2% were in executive or C-level positions, 11% were Heads of Testing or Quality, 15% were Test or Quality Managers, 42% were other testing specialists, ranging from test engineers to test coaches, and the remaining 27% were in other technology roles. We refer to quality engineers throughout this report, by which we mean anyone working on testing and quality.

Of the respondents, 27% decide which tools to use, 40% influence decisions about which tools to use, 20% approve tool decisions, and the remaining 10% don’t have direct influence on the tool selection.

We focused this report on tools that relate to testing, but not on test automation tools. We are more interested in general-purpose tools, because test automation tools are usually chosen based on the technology under test, whereas tools such as issue management systems are not. As well as issue management, we asked about source control, manual test management, service management, continuous integration, static code analysis, and reporting.

Version Control

It is difficult to imagine how we would develop software at the speed we do in 2021 without version control systems. Their complexity is often abstracted into a trunk-and-branch tree diagram, but that is usually an over-simplification. In reality, branches split off and are merged back into other branches, so history is better described as a directed acyclic graph (DAG) than as a tree. All version control systems provide some strategy for managing the complexity of multiple engineers working simultaneously on the graph and needing to merge changes into their working copies.
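To make the DAG idea concrete, here is a minimal Python sketch. The commit names and graph are hypothetical: each commit simply lists its parents, and a merge commit has two. The `merge_bases` function finds the nearest common ancestors of two commits, which is roughly the idea behind what `git merge-base` reports, although Git's own implementation is far more optimised.

```python
from collections import deque
from typing import Deque, Dict, List, Set

# Hypothetical history: each commit lists its parent commits.
PARENTS: Dict[str, List[str]] = {
    "a": [],            # initial commit on the trunk
    "b": ["a"],
    "c": ["b"],         # trunk carries on
    "f1": ["b"],        # feature branch forks from b
    "f2": ["f1"],
    "m1": ["c", "f2"],  # merge commit: two parents, so this is no longer a tree
    "d": ["m1"],
    "g1": ["c"],        # a second feature branch forks from c
}


def ancestors(commit: str) -> Set[str]:
    """The commit itself plus everything reachable by following parent links."""
    seen: Set[str] = set()
    queue: Deque[str] = deque([commit])
    while queue:
        current = queue.popleft()
        if current not in seen:
            seen.add(current)
            queue.extend(PARENTS[current])
    return seen


def merge_bases(x: str, y: str) -> Set[str]:
    """Common ancestors of x and y that are not ancestors of another common ancestor."""
    common = ancestors(x) & ancestors(y)
    return {
        c for c in common
        if not any(other != c and c in ancestors(other) for other in common)
    }


print(merge_bases("d", "g1"))  # {'c'}
```

The second feature branch forked from `c`, and `d` reaches `c` through the merge commit, so `c` is the point a three-way merge of the two would compare both sides against.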

Quality engineers generally did not use version control systems until test automation started to move away from commercial off-the-shelf tools with inbuilt IDEs. These days most quality engineers use general purpose programming languages with various open-source libraries to achieve test automation. They need to manage the versions of this code just like software developers, so version control skills are now critical for many in the profession. This also supports other modern automation practices, like including automated tests in a pipeline.

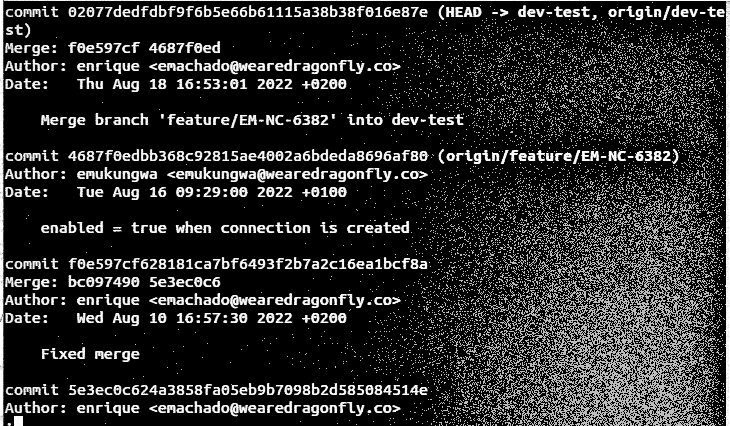

Without a doubt, Git is the winner in our survey. Nearly 78% of respondents use Git, and only Git. A small number (7%) are still hanging on to Subversion. We were surprised to see only a tiny proportion, less than 5%, using the Microsoft tools (Team Foundation Server and Azure DevOps).

Git has some great features. It is decentralised, so it can be used without a central server, although that isn’t the main way people use it. It also provides cryptographic verification of history, which makes that history effectively immutable. These features are reminiscent of blockchain technology, although Git predates it by several years. We note that the largest group of respondents use a public cloud-hosted version control system.
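The chaining that makes Git history tamper-evident can be sketched in a few lines of Python. The `git_object_id` function follows Git's object hashing format (the object type and size, a NUL byte, then the content, hashed with SHA-1). The commit format, however, is deliberately simplified and hypothetical: it omits the author, committer and timestamps that real commits record, and uses a blob ID in place of a proper tree object.

```python
import hashlib
from typing import List, Optional


def git_object_id(obj_type: str, payload: bytes) -> str:
    """SHA-1 of Git's object header ("<type> <size>" plus a NUL byte) and the payload."""
    header = f"{obj_type} {len(payload)}".encode() + b"\0"
    return hashlib.sha1(header + payload).hexdigest()


def simplified_commit_id(tree_id: str, parent_id: Optional[str], message: str) -> str:
    """Hypothetical, simplified commit: real commits also record author, committer and times."""
    lines = [f"tree {tree_id}"]
    if parent_id is not None:
        lines.append(f"parent {parent_id}")
    text = "\n".join(lines) + "\n\n" + message + "\n"
    return git_object_id("commit", text.encode())


def build_history(file_versions: List[bytes]) -> List[str]:
    """Hash a linear history in which each commit snapshots one file's contents."""
    commit_ids: List[str] = []
    parent: Optional[str] = None
    for i, content in enumerate(file_versions):
        # Simplification: the blob ID stands in for a real tree object.
        blob_id = git_object_id("blob", content)
        commit_id = simplified_commit_id(blob_id, parent, f"change {i}")
        commit_ids.append(commit_id)
        parent = commit_id
    return commit_ids


original = build_history([b"v1\n", b"v2\n", b"v3\n"])
tampered = build_history([b"v1 (edited)\n", b"v2\n", b"v3\n"])

# Editing only the first version changes every later commit ID as well.
print(all(o != t for o, t in zip(original, tampered)))  # True
print(git_object_id("blob", b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
```

Because each commit ID covers its parent's ID, rewriting an old commit cannot go unnoticed by anyone holding a later ID. The empty-blob hash printed last is the same one Git itself produces, which makes a handy sanity check.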

More likely, the prevalence of Git has been driven by the popularity of the Software as a Service (SaaS) platforms built around it: GitHub, GitLab, SourceForge and Atlassian’s Bitbucket. However, only 31% of our respondents are using a public cloud system for their source control.

Stack Overflow conduct an annual developer survey and observed that Git use among their respondents rose from 69% in 2015 to 93% in 2021, which supports our findings. We also noted that only 8% of our respondents were planning to move away from their current tool, and only half of these were using Git, which suggests Git adoption will continue to increase.

The first system designed to let multiple people work concurrently on the same code base originated at a university in 1984 (Grune, 1984). This led to the release of CVS in 1985, which was the dominant product until the release of Subversion (SVN) in 2001. SVN was built by CollabNet, a collaboration software provider. In 2005, BitMover, the company behind the proprietary BitKeeper version control system, withdrew the free licence it had provided to the Linux kernel project. This led Linus Torvalds to evaluate alternative solutions, and he decided none were good enough for the world’s biggest open-source project. After two weeks of development he published the first release of Git.

Continuous Integration

The term Continuous Integration (CI) was first proposed in 1991, but it sometimes seems that, outside of development, many still don’t fully embrace the practice. CI tools are crucial for implementing modern quality engineering practices. Not only do they automate tasks, they also provide consistency and help avoid human error. For quality engineers they provide a framework for executing unit tests as well as automated functional tests.

We found that the open-source Jenkins is the most popular tool, with 47% of respondents naming it as their current tool.

The next most popular tool was GitHub, at 15%, and no other tool was named by more than 10% of respondents. Only 23% of respondents are using a public cloud system, with the majority using private cloud or on-premise systems. Again, 8% of respondents are planning to move away from their tool, but most of these appear to be current Jenkins users, suggesting its market share is likely to decline.

The 2020 JetBrains Developer Ecosystem survey found similar results, with 55% using Jenkins and 15% using GitHub Actions. It also found that 27% were using GitLab, and it showed higher usage of Travis CI, TeamCity and GitLab than our survey did.

The primary features required for a CI server are support for compiling code, running tests against the code and deploying the code. Increasingly, as infrastructure is defined as code, it is not just software that is deployed through CI, but also servers and other infrastructure components.
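The compile, test and deploy responsibilities usually run as ordered stages, where a failure stops the later ones. Here is a minimal Python sketch of that gating logic; the `Stage` and `run_pipeline` names and the stage functions are hypothetical, and this is not the configuration format of Jenkins or any other CI product.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str
    action: Callable[[], bool]  # returns True if the stage succeeded


@dataclass
class PipelineResult:
    completed: List[str] = field(default_factory=list)
    failed: Optional[str] = None


def run_pipeline(stages: List[Stage]) -> PipelineResult:
    """Run stages in order, stopping at the first failure so later stages never run."""
    result = PipelineResult()
    for stage in stages:
        if not stage.action():
            result.failed = stage.name
            break
        result.completed.append(stage.name)
    return result


# Hypothetical stage implementations: the test stage fails, so deploy is skipped.
def compile_code() -> bool:
    return True


def run_tests() -> bool:
    return False


def deploy() -> bool:
    return True


outcome = run_pipeline([
    Stage("compile", compile_code),
    Stage("test", run_tests),
    Stage("deploy", deploy),
])
print(outcome)  # PipelineResult(completed=['compile'], failed='test')
```

Stopping at the first failed stage is what keeps untested code from being deployed, which is why the quality of the test stage matters so much to the value of the whole pipeline.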

Of the leading CI products available, there seems to be little difference in their ability to integrate with other tools, to manage different software ecosystems (cross-platform vs Windows), or even to be hosted on different operating systems. It seems the biggest difference is that Jenkins is open-source, and most of the others are not. Some CI systems are SaaS only, which can give you less control over the build environment and make larger scale automated testing more challenging.

Researchers studied 246 GitHub projects that used some CI tool to investigate the productivity and quality impact of CI. They concluded that the use of CI:

- Has a significant positive effect on productivity, measured in terms of pull requests being accepted vs rejected.

- Has no measurable effect on the number of externally reported bugs.

This is interesting because it shows that the use of CI alone does not drive quality. Google, by contrast, pairs CI with extensive automated testing: its CI system runs 4.2 million tests, and it calculated that it has 150 million test executions per day, which means each test runs on average about 35 times a day.
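The Google average follows from dividing daily executions by the number of tests:

$$
\frac{150 \times 10^{6}\ \text{executions per day}}{4.2 \times 10^{6}\ \text{tests}} \approx 35.7\ \text{executions per test per day}
$$

That is, a little over 35 runs of each test every day.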

So why do so many teams have a CI server, but not have effective tests running in it? A systematic literature review by the University of Adelaide called out the following key challenges: “Although automated testing is a key part of CI, many organisations studied were unable to automate all types of tests. Attributing this to lack of infrastructure and the time-consuming process of automating manual tests. Poor test quality, including unreliable tests, too many tests, low coverage, and long running tests.”

These issues reduce confidence in the CI pipeline, so it is relied upon less for testing and simply becomes a build automation tool.
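Unreliable (flaky) tests are one of the challenges named in that review, and a simple heuristic can surface them from CI history: if the same test both passes and fails against the same commit, the code did not change, so the test itself or its environment is likely unreliable. This Python sketch applies that heuristic to hypothetical CI results, assuming each record is a (commit, test name, passed) tuple; that format is illustrative, not any CI tool's export.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical CI history: (commit, test name, passed?)
RUNS: List[Tuple[str, str, bool]] = [
    ("abc123", "test_login", True),
    ("abc123", "test_login", False),     # same commit, different outcome
    ("abc123", "test_checkout", True),
    ("def456", "test_checkout", False),  # fails consistently on a new commit
    ("def456", "test_checkout", False),
    ("def456", "test_search", True),
]


def flaky_tests(runs: List[Tuple[str, str, bool]]) -> List[str]:
    """Return tests that both passed and failed against at least one commit."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(passed)
    return sorted({test for (_, test), seen in outcomes.items() if seen == {True, False}})


print(flaky_tests(RUNS))  # ['test_login']
```

`test_checkout` is not flagged: it fails on every run for `def456`, which points to a genuine regression rather than flakiness.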

Issue Management

We weren’t particularly surprised to find that Jira is the most popular issue management tool out there. 69% of respondents mentioned Jira and 11% mentioned Team Foundation Server or Azure DevOps; no other tool had a statistically significant share. One respondent used Excel, and two used Shortcut (previously Clubhouse). One person still seems to be using Micro Focus ALM, but plans to move away. Not a single Jira user identified a plan to move away from it, indicating the pull of the Atlassian ecosystem.

The 2020 JetBrains Developer Ecosystem survey again found similar results for the Atlassian and Microsoft tools, but showed higher usage of GitLab and GitHub than we did. It also showed strong results for Trello, which is surprising given it is such a generic tool. Interestingly, in 2019 JetBrains also found that 61% of respondents used different tools for different teams within their organisation.

Around 31% of respondents are using a public cloud, and 21% are using on-premise versions, which is interesting given that Atlassian is ending support for its on-premise Jira Server product in favour of its Cloud and Data Center editions.

Issue management tools usually now provide an integrated way to manage requirements, tasks, and defects. Typically they also provide search functionality, agile boards, customisable workflows, and sometimes, time-tracking.

Issue management tools are also increasingly being used to measure the value streams of a software development organisation. Value stream management is not a new technique; like many process improvement techniques, it has its origins in manufacturing, and it is a recognised part of the Lean Six Sigma methodology. In essence it is a lean-based approach to mapping the value added by different parts of the software development process, identifying waste, and then managing it.

As issue management tools track the states of different types of work, they provide a proxy for measuring where work is within a larger process. One example metric is the average time issues spend in each state. Compared across components, products and teams, it can provide useful insight into where inefficiencies are rooted.
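As a sketch of the time-in-state metric, this Python example takes a hypothetical log of state transitions (issue key, state entered, timestamp) and averages how many hours issues spent in each state. Each visit is measured as the gap until the next transition, and the current, still-open state is ignored; real issue trackers expose this history in their own formats, which the example does not attempt to reproduce.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

# Hypothetical transition log: (issue key, state entered, time it was entered)
TRANSITIONS: List[Tuple[str, str, str]] = [
    ("QA-1", "To Do", "2021-03-01T09:00"),
    ("QA-1", "In Progress", "2021-03-02T09:00"),
    ("QA-1", "In Review", "2021-03-04T09:00"),
    ("QA-1", "Done", "2021-03-05T09:00"),
    ("QA-2", "To Do", "2021-03-01T09:00"),
    ("QA-2", "In Progress", "2021-03-01T21:00"),
    ("QA-2", "Done", "2021-03-03T21:00"),
]


def average_hours_in_state(transitions: List[Tuple[str, str, str]]) -> Dict[str, float]:
    """Average hours per visit to each state, across all issues."""
    by_issue: Dict[str, List[Tuple[datetime, str]]] = defaultdict(list)
    for key, state, timestamp in transitions:
        by_issue[key].append((datetime.fromisoformat(timestamp), state))

    totals: Dict[str, float] = defaultdict(float)
    visits: Dict[str, int] = defaultdict(int)
    for events in by_issue.values():
        events.sort()
        # A state lasts until the next transition; the final state is still open, so skip it.
        for (start, state), (end, _) in zip(events, events[1:]):
            totals[state] += (end - start).total_seconds() / 3600
            visits[state] += 1
    return {state: totals[state] / visits[state] for state in totals}


print(average_hours_in_state(TRANSITIONS))
# {'To Do': 18.0, 'In Progress': 48.0, 'In Review': 24.0}
```

In this made-up data, work waits 18 hours on average before being picked up but spends 48 hours in progress; the same comparison across teams or components is what points to where work stalls.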

Value stream management isn’t just about metrics, though; it involves various process improvement techniques. Value stream mapping is the initial step, and includes seven tools:

- Process activity mapping

- Supply chain response matrix

- Production variety funnel

- Quality filter mapping

- Demand amplification mapping

- Decision point analysis

- Physical structure (volume and value)

While these may sound distant from software development, they generally apply well.

Test Management

Test management tools usually support the planning and tracking of manual test activities, sometimes including tracking of automated test activities.

Often they also contain some requirements management or issue management functionality, and where they are multi-use tools in this way, the line between them and issue management tools is blurred. In fact, the Jira plugins Zephyr Squad and Xray account for a combined 31% of the test management tools in our survey, and they integrate directly into the Jira web interface. This again indicates the strength of the Atlassian ecosystem.

Test management tools paint a very different picture from the rest of the toolchain. 18% of respondents don’t use any test management tool, and there is no clear market leader. The next most prevalent system after the Atlassian plugins was Excel, which is a limited tool for tracking testing but can be enough. In this category, the Microsoft stack (Azure DevOps and Team Foundation Server) did better than in other categories, with 10% of respondents using it for tests.

Around 21% of respondents are using a public cloud tool, broadly consistent with other categories.

Service Management

IT Service Management tools are orientated towards handling incidents, service requests, problems and changes. Information from these tools is important to understand the quality of a system when it is in use.

40% of respondents did not use a service management tool, making service management the least widely implemented category in our survey. Jira Service Management and ServiceNow both received 20% of the mentions, with no other tool having a significant share. Jira Service Management is certainly suitable for startups but doesn’t have the scale required for a large corporate environment, where ServiceNow continues to be the most popular tool.

Most people who are planning to implement a different tool are either not using one at the moment or are using Jira, which could indicate that some companies have outgrown it.

Static Code Analysis

Static analysis usually means using a tool to analyse software without executing it. It is useful for tracking metrics that may point to quality issues in software. These tools use data-driven or rule-based processes, and data-driven approaches increasingly use machine learning to identify potential areas of concern.
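A rule-based check can be sketched with Python's standard `ast` module, which parses source code into a syntax tree without running it. The two rules here (flagging bare `except:` clauses, and functions with more than a hypothetical limit of five positional parameters) are illustrative choices, not the rules of SonarQube or any other product.

```python
import ast
from typing import List, Tuple

# Sample code to analyse; it is parsed, never executed.
SOURCE = '''
def load(path, mode, encoding, errors, newline, buffering):
    try:
        return open(path, mode)
    except:
        return None
'''

MAX_PARAMS = 5  # hypothetical threshold for this sketch


def check(source: str) -> List[Tuple[int, str]]:
    """Walk the syntax tree and report (line number, message) for each rule violation."""
    findings: List[Tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: a bare "except:" catches everything, including unexpected errors.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare except hides unexpected errors"))
        # Rule 2: too many positional parameters is a maintainability smell.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            count = len(node.args.args)
            if count > MAX_PARAMS:
                findings.append((node.lineno, f"function '{node.name}' takes {count} positional parameters"))
    return sorted(findings)


for line, message in check(SOURCE):
    print(line, message)
```

The `open()` call in the sample is never invoked: the checker only inspects the tree, which is what distinguishes static analysis from testing.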

Static code analysis is an important technique for increasing maintainability and improving security, and nearly 80% of respondents identified a tool they use.

SonarQube is the most prevalent at 39%, with in-IDE tools the next most prevalent at 21%. Only 16% of respondents are using public cloud, which is indicative of the popularity of in-IDE rather than server-led systems.

Delivering feedback to developers in real time through in-IDE tools is good for the efficiency of the process, as the feedback is inline. This process does not provide system-wide quality metrics though, which a server-led solution typically supports.

Reporting and Analytics

In today’s data-driven world we are all trying to measure as much as possible, especially with so much remote working. So how do people get usable insight out of all these tools?

Only 32% of respondents indicated they had integrated management information processes coming from these tools, which is low for any business process. 49% of respondents are using tools like Excel and PowerPoint to prepare reports, and 19% are using business intelligence platforms.

Interestingly, more people said they are writing custom code than are using the dashboards or reporting available from the data source tools themselves.

The average respondent had 2.59 of the five integrations we asked about. The super-integrators tend to use Jira, Jenkins and Veracode.

Integrating different tools together in the software toolchain can significantly improve the information that can be obtained from any one tool, and also improve usability.

We asked about the following integrations:

- Integration of test automation into test management tools

- Integration of issue management and test management tools

- Integration of service management and issue management tools

- Integration of static code analysis and CI tools

- Integration of source control and issue management tools

People who use generic business intelligence software to craft their own reporting are more likely to have more integrations between their tools. The most popular integrations are issue management with source control, and issue management with test management: 62% of respondents have these integrations. The least popular integration is issue management with service management, reported by just 39% of respondents.

Reporting and analytics functionality is crucial to understand the large volume of data coming from the modern quality engineering toolchain. This data can be used to monitor and control an individual test process, or to analyse retrospective data, or to compare it to industry benchmarks.

Nearly 20% are using general-purpose business intelligence software for reporting. Configuring general and multi-purpose tools to report information specific to quality engineering is often time-consuming. Using tools specifically designed for development and testing data can save a lot of that time.

In that context, it is notable that only 4% of respondents use the reporting functionality in the toolchain applications themselves. This shows that they are not getting value from each tool’s individual reporting capabilities.

Speaking generally, analytics and reporting systems can:

- Allow the dynamic analysis of value streams considering multiple sources of data.

- Produce regular reporting on quality activities in presentable form.

- Adapt to different contexts quickly — e.g. product, team, process.

- Support quality improvement, or lean methods like value stream mapping.

Linking operational data about the code base to the issues, tests and incidents relating to that code enables predictive analytics. Increasingly, the data in these systems can be used with machine learning models to predict faults in your products and processes.
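Linking commits to issues is often done by mentioning issue keys in commit messages. This Python sketch assumes Jira-style keys (an uppercase project key, a hyphen and a number) along with hypothetical commit and issue data, and counts how many bug-fixing commits touched each file. Per-file defect history like this is one kind of input fault-prediction work commonly uses, although a real model would combine many more signals.

```python
import re
from collections import Counter
from typing import Dict, List

# Assumed Jira-style issue key: uppercase project key, hyphen, number (e.g. PAY-12).
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9_]+-\d+\b")

# Hypothetical commit history from source control.
COMMITS: List[dict] = [
    {"message": "PAY-12 fix rounding in invoice totals", "files": ["billing/invoice.py"]},
    {"message": "PAY-15: handle empty basket", "files": ["cart/basket.py", "billing/invoice.py"]},
    {"message": "Refactor logging", "files": ["core/log.py"]},
    {"message": "PAY-20 add retry to gateway client", "files": ["billing/gateway.py"]},
]

# Hypothetical issue types, as they might be exported from the issue tracker.
ISSUE_TYPES: Dict[str, str] = {"PAY-12": "Bug", "PAY-15": "Bug", "PAY-20": "Story"}


def bug_fix_counts(commits: List[dict], issue_types: Dict[str, str]) -> Counter:
    """Count, per file, the commits that reference at least one Bug issue."""
    counts: Counter = Counter()
    for commit in commits:
        keys = set(ISSUE_KEY.findall(commit["message"]))
        if any(issue_types.get(key) == "Bug" for key in keys):
            counts.update(commit["files"])
    return counts


print(bug_fix_counts(COMMITS, ISSUE_TYPES).most_common())
# [('billing/invoice.py', 2), ('cart/basket.py', 1)]
```

Commits without a key, or linked only to stories, contribute nothing, so the quality of this signal depends on teams consistently referencing issues in their commit messages.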

Neuro helps you harness your data from your toolchain to create high performing engineering and quality teams. It provides complete visibility of your engineering and quality performance.

- Goal setting — Close the gap between insight and action. Set user-defined goals to track progress, identify roadblocks and align engineering and quality teams with business priorities.

- Consistency — Deliver consistency across your agile portfolio. Address the challenge of managing dependencies among teams, especially third parties.

- Automated reporting layer — Provide rapid reporting across your toolstack. Smart dashboards provide greater insight and reduce administrative overhead with automated reporting.

Neuro integrates with Jira, Zephyr and Xray. It also consumes test automation metadata from Cucumber and Jenkins, and source control history from Git-based products. Integrate your quality engineering toolchain with built-in integrations, and add your own through a REST API.