Every developer has heard of Git. Originally written by Linus Torvalds in 2005 to manage development of the Linux kernel, Git has become the go-to version control system for the overwhelming majority of development teams. With such widespread adoption, you might expect everyone from heads of development to testing and quality specialists to be making the most of the mountains of Git data generated during the software development cycle. That, however, isn't the case. Drawing conclusions manually from scattered commits across dozens of branches is a long and arduous process and, simply put, a poor use of anyone's time.

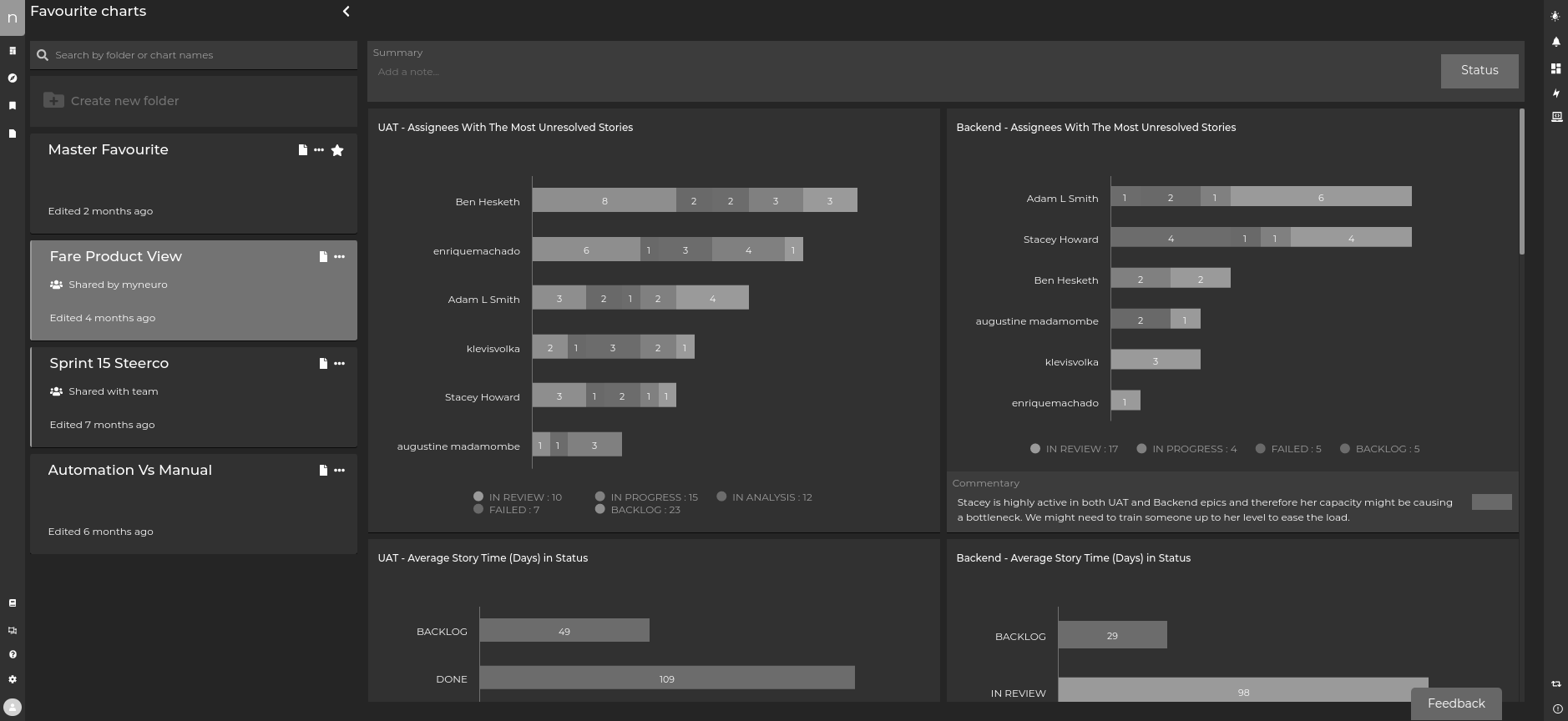

We saw the potential in this data but knew it had to be collected and presented in a digestible way. Enter Neuro. Using machine learning, Neuro examines each commit and estimates its risk from metrics such as developer experience, the number of lines added, the number of files affected, and many more.
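The simplest of these metrics come straight from Git's own history. As a minimal sketch (not Neuro's implementation), the code below pulls lines added, lines deleted, files changed, and directories touched for each commit from `git log --numstat`. The `CommitMetrics` class and the `parse_numstat` and `read_repo` functions are hypothetical names chosen for illustration, and developer-experience metrics are left out for brevity.

```python
import subprocess
from dataclasses import dataclass, field


@dataclass
class CommitMetrics:
    """Hypothetical container for simple per-commit size metrics."""
    sha: str
    author: str
    lines_added: int = 0
    lines_deleted: int = 0
    files: set = field(default_factory=set)

    @property
    def files_changed(self) -> int:
        return len(self.files)

    @property
    def directories_changed(self) -> int:
        # Files in the repository root are counted under "."
        return len({f.rsplit("/", 1)[0] if "/" in f else "." for f in self.files})


def parse_numstat(log_text: str) -> list[CommitMetrics]:
    """Parse output of: git log --numstat --format='commit %H %ae'."""
    commits: list[CommitMetrics] = []
    current = None
    for line in log_text.splitlines():
        if line.startswith("commit "):
            # Header line produced by our --format string: "commit <sha> <author email>"
            _, sha, author = line.split(" ", 2)
            current = CommitMetrics(sha=sha, author=author)
            commits.append(current)
        elif line.strip() and current is not None:
            # numstat line: "<added>\t<deleted>\t<path>"
            added, deleted, path = line.split("\t", 2)
            # Binary files report "-" instead of line counts
            if added != "-":
                current.lines_added += int(added)
            if deleted != "-":
                current.lines_deleted += int(deleted)
            current.files.add(path)
    return commits


def read_repo(repo_path: str = ".") -> list[CommitMetrics]:
    """Run git log in the given repository and parse its numstat output."""
    out = subprocess.run(
        ["git", "-C", repo_path, "log", "--numstat", "--format=commit %H %ae"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_numstat(out)
```

Calling `read_repo("path/to/repo")` returns one `CommitMetrics` per commit, newest first, ready to be fed into whatever risk model sits downstream.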

Take control of your code

Imagine you're a dev team leader. You know that your newest team member, a junior developer fresh out of university, is more likely to introduce a bug than a senior developer who is deeply familiar with the codebase. You also know which developers have worked most in which directories, along with their strengths, weaknesses, and areas of interest. When it comes to regression testing, your experience lets you predict which tests are most likely to fail, and you may have done some risk-based prioritization to decide what to watch more closely. Nearly all of this knowledge is instinct-based. Neuro takes a data-driven approach instead, identifying where bugs are most likely to be introduced, and it does so in real time for every new commit.

Where are all the bugs?

A few of the metrics behind Neuro's risk predictions were mentioned above, but there are many more. Each falls into one of three levels of insight. The first level covers the change itself: the number of lines modified, how many files and directories the commit touches, and so on. The second level concerns the people who worked on the commit. Research has shown that people and organizational processes, rather than source code metrics, are the best predictors of defects. A team leader may know each developer well enough to sense instinctively which commits are high risk, but by looking at Git data such as a developer's recent experience, whether they have worked in the affected directories before, and how many people contributed to the change, Neuro can predict the risk of every commit in real time. The third and final level of insight looks at previous bugs. How many bugs were introduced the last time this file was modified? Which commits are most likely to introduce regression bugs? Using data from all three levels, we've built a system that tracks every commit in a project and categorizes it as Low, Medium, or High risk.
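To illustrate how features from the three levels might combine into a single verdict, here is a minimal sketch. It is a stand-in, not Neuro's model: Neuro uses machine learning, whereas this sketch uses a hand-written logistic score. The `CommitFeatures` fields, every weight, and the 0.3/0.6 category thresholds are hypothetical values picked only to show the shape of the idea.

```python
import math
from dataclasses import dataclass


@dataclass
class CommitFeatures:
    # Level 1: the change itself
    lines_modified: int
    files_changed: int
    directories_changed: int
    # Level 2: the people behind the change
    author_recent_commits: int      # hypothetical: author's commits in a recent window
    author_knows_directory: bool    # has the author committed to these directories before?
    contributors: int               # developers involved in the change
    # Level 3: bug history
    prior_bug_fixes_in_files: int   # past bug-fix commits touching the same files


# Hypothetical hand-picked weights; a real model would learn these from labelled history.
BIAS = -3.0


def risk_probability(f: CommitFeatures) -> float:
    """Logistic score in (0, 1); higher means more likely to introduce a bug."""
    z = (
        BIAS
        + 0.5 * math.log1p(f.lines_modified)          # larger diffs add risk, with diminishing returns
        + 0.3 * f.files_changed
        + 0.4 * f.directories_changed
        - 0.3 * math.log1p(f.author_recent_commits)   # recent experience lowers risk
        + (0.0 if f.author_knows_directory else 0.8)  # unfamiliar directories add risk
        + 0.2 * f.contributors
        + 0.5 * f.prior_bug_fixes_in_files            # past bugs in these files add risk
    )
    return 1.0 / (1.0 + math.exp(-z))


def risk_category(p: float, medium: float = 0.3, high: float = 0.6) -> str:
    """Bucket a probability into Low / Medium / High using hypothetical thresholds."""
    if p >= high:
        return "High"
    if p >= medium:
        return "Medium"
    return "Low"
```

For example, a five-line change by an active developer in familiar directories scores Low, while an 800-line change across five directories by someone new to them, in files with a history of bug fixes, scores High. In a learned model the weights would come from a project's own bug-introducing commits, and the thresholds would be tuned so the High bucket stays small enough for testers to act on.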

Give your test team the power

So what does this mean for the average tester? Simply put, Neuro leverages the huge amount of Git data generated with every commit to draw attention to the risky ones. It takes the instinctive knowledge gained from years of working with a dev team and backs it up with data that even the least experienced tester can use to guide their testing.

Thank you for reading.